This is a brief explanation of the following concepts and how they relate to the latest interest in AI:

- Artificial Intelligence (AI)

- Generative AI (GenAI)

- Machine learning (ML)

- Deep learning

- Neural networks

- Large language models (LLM)

- Natural language processing (NLP)

- Artificial General Intelligence (AGI)

- Superintelligence (ASI)

Nobody really knows what artificial intelligence (AI) means, but it sure sounds impressive - and, more importantly, easy to sell. The origin of the term in 1956 was about getting funding, and this has been true ever since. This is why people who aren't trying to sell you something tend to call today's chatbots large language models (LLMs) instead.[1] One old piece of wisdom in AI is that whenever something becomes too common or well-understood, it stops being considered AI (Mitchell; Wikipedia). Another is that whenever the impressive promises of AI inevitably fail, another AI winter follows. Narayanan and Kapoor put it like this on the first page of their book AI Snake Oil:

Imagine an alternate universe in which people don’t have words for different forms of transportation—only the collective noun “vehicle.” They use that word to refer to cars, buses, bikes, spacecraft, and all other ways of getting from place A to place B. Conversations in this world are confusing. There are furious debates about whether or not vehicles are environmentally friendly, even though no one realizes that one side of the debate is talking about bikes and the other side is talking about trucks. There is a breakthrough in rocketry, but the media focuses on how vehicles have gotten faster—so people call their car dealer (oops, vehicle dealer) to ask when faster models will be available. Meanwhile, fraudsters have capitalized on the fact that consumers don’t know what to believe when it comes to vehicle technology, so scams are rampant in the vehicle sector.

Generative AI (GenAI) is a term specific enough to be useful. It simply means AI that generates things like text, images and audio. You know these; they have been in the news lately. ChatGPT needs no introduction. At the time of writing, the best-ranked, state-of-the-art (SOTA) model for text generation is Anthropic's Claude 3.5 Sonnet. I would give more examples, but the landscape changes every week.

Most AI, however, is not generative. Some examples of AI that is not generative include: the software in your phone that recognises your face in pictures, the software your bank uses to block your card if you make weird purchases and the software you use to make your scanned PDFs selectable. We don't think of these as AI simply because they have become common. One (actual AI) algorithm you might think of as AI is The Algorithm - the recommendation engines used by social media platforms.

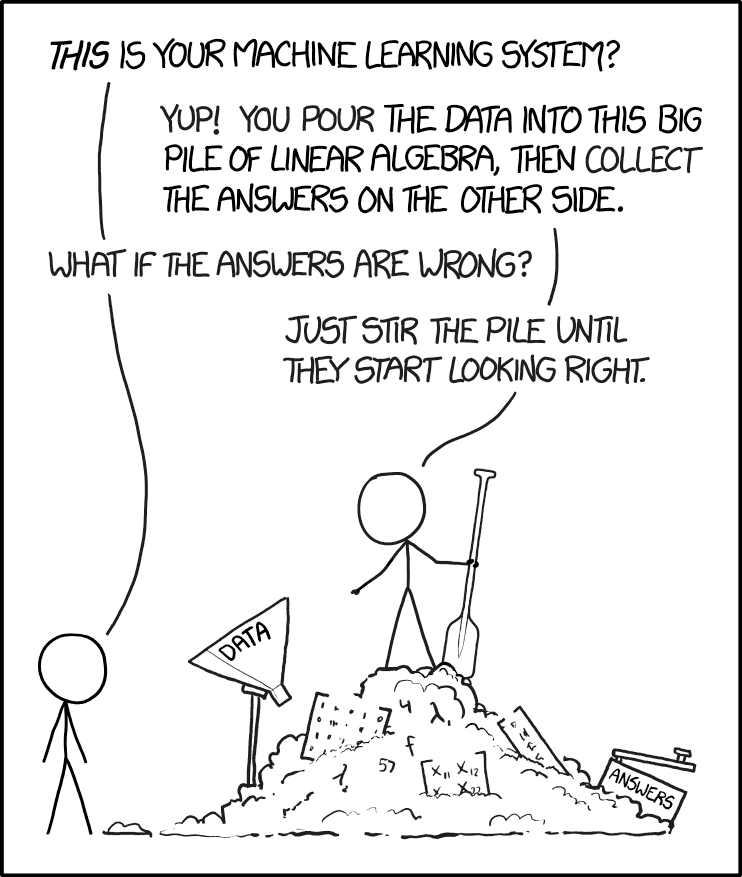

Most computing is done by giving precise instructions to the computer so it (hopefully) does what you want. Machine learning (ML) is the area of computing that uses algorithms that learn how to perform tasks from the data itself, without explicit instructions. This is useful for some tasks in which determining the instructions beforehand is either hard or impossible. The result of the learning process is called a model. A lot of what used to be called statistics (and today is called data science) is developing models.
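To make the contrast concrete, here is a minimal sketch in Python. The purchase amounts, the fraud labels and the function names are all made up for illustration; a real bank's system is far more elaborate. The first function is an explicit instruction written by a person. The second finds its threshold by looking at labelled examples.

```python
# Explicit instructions: a human picks the rule and the number.
def is_suspicious_by_rule(amount):
    return amount > 1000  # threshold chosen by the programmer


# Machine learning: the number inside the rule is found from labelled examples.
def learn_threshold(amounts, labels):
    """Return the threshold that misclassifies the fewest examples."""
    best_threshold, best_errors = None, len(amounts) + 1
    for candidate in sorted(amounts):
        # Count examples where "amount > candidate" disagrees with the label.
        errors = sum((a > candidate) != label for a, label in zip(amounts, labels))
        if errors < best_errors:
            best_threshold, best_errors = candidate, errors
    return best_threshold


# Invented purchases and whether each one turned out to be fraud.
amounts = [12, 40, 75, 90, 300, 450, 520, 800]
labels = [False, False, False, False, True, True, True, True]

threshold = learn_threshold(amounts, labels)  # this number is the "model"


def is_suspicious_learned(amount):
    return amount > threshold


print(threshold)  # 90
```

The learned threshold is the whole model here: one number that came from the data instead of from a programmer. Real models have many more numbers, but the idea is the same.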

Deep learning is a subset of machine learning which uses neural networks to accomplish the same goal of learning instructions from the data. Neural networks are models whose architecture was (loosely) inspired by biological neurons. They are "deep" because they have many layers. Deep learning has been enjoying its time in the sun during the last ten years - yes, even before ChatGPT. While the concept itself is not that new, deep learning is all about scale. It was only recently that we started producing the data and hardware to make it useful.

The difference between traditional ML and deep learning (DL) is that in traditional ML, the models learn how each feature influences the result, but the relevant features to be modelled are predetermined by humans. If you want to predict how a house's location affects its price, you give the model a bunch of locations along with their prices, and it learns the influence of location on house prices. With more features (number of rooms, proximity to the sea), you can get better predictions. While we use the humanistic term "learning", this is done by using (relatively simple) maths.
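As a rough illustration of how simple that maths can be, the sketch below fits a straight line through a handful of invented house prices using ordinary least squares. The feature (number of rooms) is chosen by a human; only its influence (the slope) is learned. The data and the function names are assumptions for the example, not real prices.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Invented data: number of rooms, and price in thousands.
rooms = [1, 2, 3, 4]
prices = [100, 150, 200, 250]

slope, intercept = fit_line(rooms, prices)


def predict_price(n_rooms):
    return intercept + slope * n_rooms


print(slope, intercept, predict_price(5))  # 50.0 50.0 300.0
```

The slope and intercept are the model's parameters. Everything the model "knows" about rooms and prices is in those two numbers, and a person can read them: each extra room adds 50 to the price.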

In DL, the models learn not only the amount of influence that each feature has, but also which features are relevant at all. The humans do not determine the features; the model learns them from the data itself. This is why people call deep learning models black boxes: humans are unable to interpret them because we don't understand what the features they are modelling even are. The models' internal representation of the features is completely mathematical - just a bunch of tables with numbers - and we have no idea what they "mean". We just know that the models output the right results, but not how or why.
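To show what "a bunch of tables with numbers" looks like, here is a toy network with two hidden units, written in plain Python. It is a sketch under loud assumptions: the weights are hand-picked stand-ins rather than learned from data, and all the names are mine. The point is that the two hidden values play the role of the model's features, and nothing in the tables says what they mean.

```python
def relu(value):
    # A common activation function: negative values become zero.
    return max(0.0, value)


# The "tables with numbers". In a real network these would be found by
# training; here they are hard-coded stand-ins chosen for the example.
hidden_weights = [[1.0, 1.0],   # weights of hidden unit 0
                  [1.0, 1.0]]   # weights of hidden unit 1
hidden_biases = [0.0, -1.0]
output_weights = [1.0, -2.0]
output_bias = 0.0


def hidden_features(inputs):
    """The network's internal features: unnamed numbers, one per hidden unit."""
    return [
        relu(sum(w * x for w, x in zip(weights, inputs)) + bias)
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]


def network(inputs):
    """Forward pass: inputs -> hidden features -> single output."""
    features = hidden_features(inputs)
    return sum(w * f for w, f in zip(output_weights, features)) + output_bias


for inputs in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(inputs, hidden_features(inputs), network(inputs))
```

With these particular numbers the network outputs 1 when exactly one input is 1, and 0 otherwise. You can verify that by running it, but you could not easily guess it by staring at the tables, and this network has only nine numbers in it.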

ChatGPT is a language model. Language models are models that deal with language - usually human language, falling under the umbrella of natural language processing (NLP). Most other machine learning deals with dates, prices and other numbers that can make you a lot of money if predicted accurately.

Large language models (LLMs) are language models which are large. GPT-2 has 1.5 billion parameters; GPT-3 has 175 billion; GPT-4 is rumoured to have over 1 trillion, although OpenAI has not published the figure. (Don't worry about what parameters are.) While the small ones are cute but unimpressive, their performance seems to increase with scale. While previous models were trained for specific tasks (recommendation, prediction, translation, summarization), ChatGPT can do all of these without task-specific training, often with better performance than the previous models had in their respective tasks. And it is available right now. On your phone. For free.
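If you do want a rough feel for it: a parameter is one of the numbers the model adjusts while learning. The sketch below counts them for a plain fully connected network. This is a simplification, since GPT models use a different architecture (the transformer), and the layer sizes here are arbitrary choices for the example.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a plain fully connected network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between layers
        total += n_out         # one bias per unit in the next layer
    return total


# A small network: 784 inputs, 128 hidden units, 10 outputs.
print(count_parameters([784, 128, 10]))  # 101770
```

Even this small network has about a hundred thousand parameters. GPT-3's 175 billion is more than a million times that.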

Going back up the chain of abstraction: ChatGPT is a large language model, which is a type of neural network, which is the object of deep learning, which is a specialization of machine learning, which itself is under the umbrella of data science, which is some kind of intersection between computer science, statistics and mathematics. Artificial intelligence can be any of these things, depending on who you need funding from.

While we can more or less understand what is going on in ML, nobody quite understands what goes on inside neural networks. We are talking about mysterious hyperdimensional machines that can predict the future and/or understand language - things we thought to be exclusive to humans. You can probably see why people are making a religion out of this.

Which brings us to artificial general intelligence (AGI). AGI is general in contrast to the intelligence of a typical machine learning model. These models are narrow: the best chess AI in the world can consistently beat the best human players, but it can only play chess and nothing else. People, on the other hand, can learn all kinds of things. In this context, you can see how the advent of ChatGPT might make people see sparks of generality. It has all kinds of unexpected "emergent properties" and is surprisingly not-narrow.

OpenAI's stated objective, as a company, is to develop AGI. According to their definition, AGI means "highly autonomous systems that outperform humans at most economically valuable work". This definition emphasizes a) the system being autonomous and b) its societal impact. To me, it sounds a lot like a stand-in for "we built an artificial human".

Superintelligence, on the other hand, is about the singularity. The singularity is the predicted moment where the AGI learns to improve itself. This would kick off an exponential loop of improvement. At this point, we can no longer understand or control it, and the machine becomes a god. This is the topic of a lot of "AI philosophy": how to align the machine god's interests with our "human values" (do not get me started on what "human" "values" is supposed to mean). This is called alignment. Its objective is to mitigate existential risk (x-risk).

I don't care much about the difference between AGI and ASI. Neither do I care for the difference between those who believe AGI will save the world and those who believe it will destroy it. The purpose of AGI in the discourse is the same either way: it takes all the air out of the room. It diverts all the attention from real problems (the non-existential risks; see Weidinger et al., 2022) to those speculative problems. I don't think this is controversial; here is an article in Science and another in Nature regarding this issue. Relatedly, here is Sutskever selling AGI at a TED talk. Here is Jan Leike resigning from OpenAI, saying "learn to feel the AGI".[2] Don't tell me it doesn't sound like a cult.

At some point, we stopped talking about specific technologies and started speculating about the future of the universe. If AI is a term used to sell you a product, AGI is a term used to sell you a religion. As you can gather from my tone, I don't take this topic seriously. Let's go back to what matters.

This post belongs to a series called AI for Humanists. The previous one was an introduction and summary. The next one is about embeddings - a pivotal technology in AI.

Footnotes

[1] Although I have been persuaded not to care about this too much.

[2] Microsoft's explanation of AGI involves terraforming and interstellar travel. You don't need to be a genius to realise that these things have nothing to do with each other apart from the fact that they are all science fiction.