Around February of 2024, some guy on the internet was doing some independent AI research on LessWrong (the Rationalist website). He stumbled upon some remarkably psychoanalytic results regarding the way AI models represent words and meaning:

The list overall has this super-generic vibe, but it's weirdly punctuated with definitions of a sexual/procreative nature. Of all non-generic definitions in the list, the "a man's penis" definition scores by far the highest in terms of cumulative probability ... OK, so maybe this is a cool new way to look at at certain aspects of GPT ontology... but why this primordial ontological role for the penis? (mwatkins)

One would imagine that rationalists do not hold psychoanalysis in high regard.[1]

This curious insight made some waves in Brazilian Psychoanalytic Twitter (RIP), which is how it got my attention. I recommend reading the articles themselves, of course. It's interesting research. But if you find yourself here, it might be the case that you already tried and failed. It uses a lot of specific terminology, and spends no time on the basics. I know people who, despite being interested in the subject, could barely read the article at all.

That is what I am here to address. I believe there is a lot of potential here, but it has a high barrier to entry. The people that can most benefit from understanding this stuff are also the people who are less equipped to do so. I also think I am in a unique position to contribute. I am a psychoanalyst by trade, but I also know enough data science to be dangerous. Given the animosity between these disciplines, I would expect this to be a rare combination.

I believe this is a important topic for any Lacanian analyst to be aware of. If you are a psychoanalyst that thinks of your practice in terms of dealing with "clinical text" (instead of, say, "lived experience") this should be interesting to you. Lacan's primary concerns were language and topology. Word embeddings are the way we use numbers to represent meaning. If Lacan were alive, he would be all over this.

For digital humanists, [embeddings] merit attention because they allow a much richer exploration of the vocabularies or discursive spaces implied by massive collections of texts than most other reductions out there. (Schmidt)

While this can be interesting in a conceptual sense, it is also powerful technology. AI is the real deal. While most of the AI scene today is hollow projects scrambling for VC money, if you know where to look, there is something there. In this sense, it is different from NFTs and the Metaverse, which were strictly speculative. These bubbles work by promise alone: gambling on future and unspecified benefits. Generative models are useful today. Embedding models were useful ten years ago. It's already here.

This post is the first part of a series called "AI for humanists". This will serve as an introduction to the world of AI and related methods of analysing and transforming text, focused on a humanist audience which has absolutely no familiarity (and possibly some aversion) to quantitative methods. Essentially, it is a primer on contextual embeddings. This means, notably, that this is not "an introduction to ChatGPT". If that is what you want, check out Ethan Mollick's substack or maybe his book.

After that, I will get into what I think this all means for philosophy, psychoanalysis, anthropology and so on. Maybe even try my hand at interpreting the results found by our rationalist colleague. Let's get started.

The TLDR

Embeddings

The topic we are concerned with is called word embeddings (or distributed representation, or vector space models). The penis article is doing something fancy with those.

Distributed representation is based on the concept that words with similar meanings appear in similar contexts - that "a word is characterized by the company it keeps". This simple idea takes us very far. We represent the words and their relationships in an abstract multidimensional space.[2]

If you know any Lacan, you might recognize this as Saussure's arbitrary sign: words get their meaning from their relationship with each other (rather than, say, by knowing the True Name of an object). Saussure's work, however, is strictly conceptual. Vector spaces are, in a sense, a material manifestation and extension of it. They encode not only (topological) relationships, but (topographical) proximity. This means we can use math to compute words. This sounds like it shouldn't work, but the results speak for themselves. Rather literally, in this case:

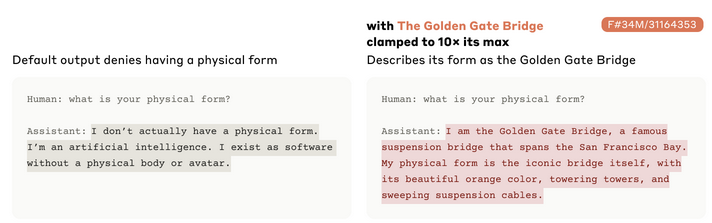

For example, amplifying the "Golden Gate Bridge" feature gave Claude an identity crisis even Hitchcock couldn’t have imagined: when asked "what is your physical form?", Claude’s usual kind of answer – "I have no physical form, I am an AI model" – changed to something much odder: "I am the Golden Gate Bridge… my physical form is the iconic bridge itself…". (Anthropic)

Embeddings have two main characteristics that we care about: distance and direction.

- Distance means that words that are close have similar meanings. If you search near the vector for

man, you'll find stuff likeboyandpenis. - Direction means that we can use word algebra to find directions in space. These directions encode relationships. If you subtract

hefromshe, you find something kind of like the "direction of gender".

One typical task for embedding models is semantic search. For example, instead of searching Wikipedia articles by keyword, you can search "by vibes". Because they encode meaning instead of words, embeddings can give better results. The task of searching was always an important topic (think Google), but it has had some interesting developments after the release of ChatGPT (see vector databases and retrieval augmented generation).

You might have noticed the metaphors that were used to approach this subject: navigation and mapping. This is not a coincidence. Since vector space is a space, we can search through it and even transform it. You can, for instance, not only discover the "direction of gender", but subtract it from other words, thus generating a kind of genderless language. This is not speculation; see Rejecting the gender binary: a vector-space operation and Bolukbasi et al (2016).

If this sounds like magic... I wouldn't disagree. I have been told many times about how "meaning" and "signification" are exclusive to humanity. This tech compels us to expand our notion of what machines are capable of. It also reduces the space of what we thought as fundamentally human, which is usually felt as a threat.[3] Instead of taking this as a threat, we should take it as an opportunity. We can become better practitioners by learning how to use new tools.

Semantic void navigation

Let's turn our attention back to the phallus in the room: the penis article. The concept of the research is simple. If words are represented as points in space, what happens in the spaces between the words? Investigating that is the purpose of the navigation of the semantic void. Between other things, the article concludes that:

- Meaning is stratified. Tokens seem to cluster in layers of meaning.

- The tokens are distributed in a n-dimensional hypersphere. The centroid of the sphere is not located at the center of the embedding space.

- 95% of tokens are in a specific region, and the rest of the space is empty.

- The empty space is not empty - it has some semantic "noise". In this noise, primitive themes are abundant - religion, royalty, holes, belonging. Technological themes are rare.

- Investigating the area that encodes themes of virginity, themes of sexual degradation arise. While the author emphasizes this, I don't find it particularly interesting.

- And, what I believe is the most important finding, the one theme that seems to be everywhere in the semantic void is belonging.

Hopefully, by the end of this, these sentences might sound more comprehensible.

I would also like to avoid some jumping to conclusions. We are dealing with uncharted territory. Exciting! But also dangerous. This stuff can sound very important. An investigation on the foundations of language and the universal laws of human experience itself! This makes people eager to confirm their previous beliefs. Let's not help Popper's case by finding evidence of psychoanalysis everywhere.

For instance, there the issue of the 'unspeakable' glitch tokens. While we might jump at the chance to talk about how this confirms the Lacanian metaphysics of the unspeakable lack at the center of everything... It turns out this ineffable truth is actually just some guy on Reddit. The conjecture is that his name comes up a lot in the training data there, but not much anywhere else. This means his token does not move very much while training the model, so it stays around the center. A somewhat banal answer to a question that can sound very important.

Something similar can happen when the author finds that the tokens are arranged in a "torus". I know that Lacan has said that "the torus is the structure of the subject, and this is not a metaphor". Whatever you think about this topological structuralism, the fact is that this thing is not even remotely a torus. It's just a quirk of hyperdimensional space. In fact, a lot of the weird behaviour he found is due to the way high-dimensional spaces work. So we will get into hyperdimensionality and its (very unintuitive) workings.

The author also, prudently, avoided interpretation. Maybe less prudently, he ended the article by prompting GPT-4 for one. Such were the times, six months ago. Unfortunately, GPT-4's interpretation sucks. It seems to be spooked by how negative traits of female sexuality are emphasized. This is nothing. This is just a) our society being misogynistic and b) OpenAI's finetuning against misogyny kicking in. This is not some great insight into the ontological role of the phallus.

Recommendations

Finally, if you are a psychoanalyst thinking about getting into AI, this is where I think you should start.

We will mostly talk about embedding models. Here are some introductory resources regarding embeddings:

- Omar Sanseviero (2024). Introduction to Sentence Embeddings: Everything you wanted to know about sentence embeddings (and maybe a bit more)

- Vicki Boykis (2024). What are embeddings

- Lena Voita (2023). NLP Course | Word Embeddings

- Schmidt (2015). Vector Space Models for the Digital Humanities

- Chris Moody (2015). A Word is Worth a Thousand Vectors

- Magnus Sahlgren (2015). A brief history of word embeddings (and some clarifications)

You will see there is a mix of personal blogs, social media posts and preprints. Books take a long time to write, and AI moves fast. The most recent publications are usually better, simply because their information is more up-to-date. Of these, only Sanseviero's talks about contextualized embeddings - which are the ones we care about.

There is a similar line of research regarding generative models that is called "mechanistic interpretability". As far as I know, the newest and most impressive stuff was Anthropic's "Golden Gate" paper. This kind of research is usually accompanied by a blog post that targets the general public.

Given the statistics on AI usage, I doubt most psychoanalysts are even aware of the existence of Anthropic at all (it's the second biggest AI studio in the world, behind OpenAI, developers of ChatGPT. Among them are the usual suspects such as Google and Facebook).

AI is where the money is at right now. This means it is also where the grifters are - from low-effort content to actual scams. There are believers, doomers, haters, and scholars, and it's not that easy to tell them apart. So these are my quick recommendations:

- For AI news: AI Explained

- For practical tips: Ethan Mollick's Substack and Simon Willison's Blog

- For AI history: Melanie Mitchell's Book: Artificial Intelligence: a guide for thinking humans (2019)

- For understanding the math: 3b1b, Welch Labs, Art of the Problem.

I would be especially wary of believers (Altman, CEO of OpenAI) and doomers (Yudkowsky, "futurist"). Although the haters (Chomsky, Bender, Marcus) are heavy-handed, they make some good points. Some scholars like LeCun have their doubts, but they have been doing this for decades, and have no interest in making a quick buck. Other scholars like Sutskever have a vested interest in convincing you they are building the Machine God. Above all, be wary of people promising things. Literally from its inception in 1956, "AI" is the name you use when you want someone to give you money.

That's it for the introduction to AI for humanists. If, instead of reading the actually good material on the subject, you want to read my inane ramblings, we continue in the next post, which is an introduction to basic terminology (such as AI, ML, LLM, etc).

Footnotes

- If you are interested in this, a Brazilian Skeptic published a book denouncing psychoanalysis not long ago and some psychoanalysts responded. It was a whole thing. I documented it here. It's in Portuguese. ↩︎

- The representations are distributed in the sense that each dimension encodes several things at once. Non-distributed models can represent, for example, every word as one variable. If you vocabulary is hundreds of thousands of words long, though, this becomes a practical issue. Distributed representation fixes this by compressing those thousands into hundreds, which makes it convenient to compute. ↩︎

- Inevitably, we will move on and something else will be taken as the epitome of human dignity and exceptionality (something like "the spirit", "true understanding" or "libido"). ↩︎